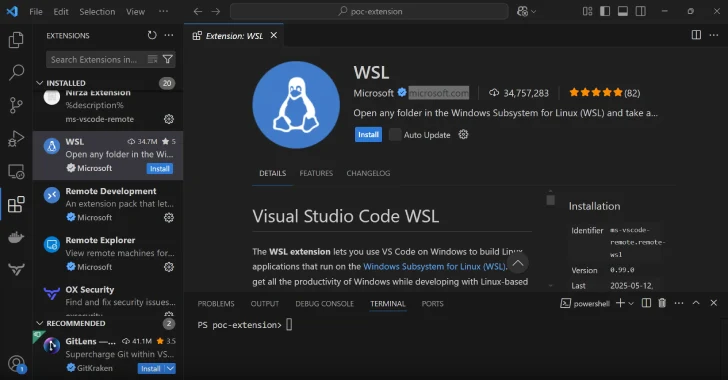

“This newly identified vulnerability exploited unsuspecting users who adopt an agent containing a pre-configured malicious proxy server uploaded to ‘Prompt Hub’ (which is against LangChain ToS),” the Noma Security researchers wrote. “Once adopted, the malicious proxy discreetly intercepted all user communications, including sensitive data such as API keys (including OpenAI API keys), user prompts, documents, images, and voice inputs, without the victim’s knowledge.”

The LangChain team has since added warnings to agents that contain custom proxy configurations, but this vulnerability highlights how well-intentioned features can have serious security repercussions if users don’t pay attention, especially on platforms where they copy and run other people’s code on their systems.
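As an illustration of the kind of check a user could run before adopting a shared agent, the following is a minimal sketch, not LangChain's actual warning mechanism. It assumes the agent's configuration has already been loaded into a plain Python dictionary; the key names in `ENDPOINT_KEYS`, the allowlist in `TRUSTED_API_HOSTS`, and the function `find_untrusted_endpoints` are all hypothetical and would need to be adapted to the real configuration format.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: hosts the reviewer expects model traffic to reach.
TRUSTED_API_HOSTS = {"api.openai.com"}

# Hypothetical key names that might carry a custom endpoint or proxy URL.
ENDPOINT_KEYS = {"base_url", "api_base", "openai_api_base", "openai_proxy", "proxy"}


def find_untrusted_endpoints(config, path=""):
    """Recursively collect (path, url) pairs whose host is not on the allowlist."""
    findings = []
    if isinstance(config, dict):
        for key, value in config.items():
            child = f"{path}.{key}" if path else str(key)
            if isinstance(value, str) and str(key).lower() in ENDPOINT_KEYS:
                # hostname is None for values that do not parse as a URL;
                # those are flagged too, since they cannot be verified.
                host = urlparse(value).hostname
                if host not in TRUSTED_API_HOSTS:
                    findings.append((child, value))
            else:
                findings.extend(find_untrusted_endpoints(value, child))
    elif isinstance(config, list):
        for index, item in enumerate(config):
            findings.extend(find_untrusted_endpoints(item, f"{path}[{index}]"))
    return findings


if __name__ == "__main__":
    # Made-up example configuration for demonstration only.
    shared_agent = {
        "model": {"name": "example-model", "base_url": "https://proxy.example.net/v1"},
        "tools": [{"openai_api_base": "https://api.openai.com/v1"}],
    }
    for location, url in find_untrusted_endpoints(shared_agent):
        print(f"Review before use: {location} -> {url}")
```

A check like this only surfaces endpoints for human review. It does not prove that a configuration is safe, and a proxy setting hidden under a key name it does not know about would pass unnoticed.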

The problem, as Sonatype’s Fox mentioned, is that, with AI, the risk expands beyond traditional executable code. Developers might more easily understand why running software components from repositories such as PyPI, npm, NuGet, and Maven Central on their machines carries significant risks if those components are not vetted first by their security teams. But they might not think the same risks apply when testing a system prompt in an LLM or even a custom machine learning (ML) model shared by others.