Take a risk-based approach to data security

Last, and most importantly, organizations need to discover and classify all of their data so they understand which assets must be acted on and when. Running a comprehensive scan and ensuring accurate classification of your structured, semi-structured, and unstructured data can help distinguish imminent risk from risk that can be deprioritized. Beyond ransomware protection, identity management and data exposure controls are equally important for responsible AI deployment. Organizations rapidly adopting generative AI often overlook how much access these systems have to sensitive data. Ensuring that AI systems can reason only over authorized, properly secured versions of corporate data is paramount, especially as the regulatory landscape continues to evolve. This comprehensive approach to data security addresses both traditional threats and emerging AI-related risks.
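The risk-based triage described above can be illustrated with a small sketch: classify each asset by the sensitive data types it contains, weight that by how exposed it is, and split the results into "act now" and "deprioritize". Everything here is a hypothetical illustration rather than a specific product's method: the regex patterns, the weights in `WEIGHTS`, the `publicly_exposed` and `backed_up` flags, and the threshold of 20 are all assumptions chosen for readability.

```python
import re
from dataclasses import dataclass

# Hypothetical detection patterns; a real classifier would use far richer rules.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
    "card": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}

# Hypothetical sensitivity weights per detected data type.
WEIGHTS = {"ssn": 10, "card": 10, "email": 3}


@dataclass
class Asset:
    name: str
    content: str
    publicly_exposed: bool  # assumption: e.g. an open share or public bucket
    backed_up: bool         # assumption: an immutable backup exists


def classify(asset: Asset) -> set:
    """Return the set of sensitive data types found in the asset's content."""
    return {label for label, rx in PATTERNS.items() if rx.search(asset.content)}


def risk_score(asset: Asset) -> int:
    """Sensitivity multiplied by exposure; exposed, unbacked sensitive data ranks highest."""
    sensitivity = sum(WEIGHTS[label] for label in classify(asset))
    exposure = 1 + (2 if asset.publicly_exposed else 0) + (0 if asset.backed_up else 1)
    return sensitivity * exposure


def prioritize(assets, threshold=20):
    """Split assets into act-now and deprioritized lists, highest risk first."""
    ranked = sorted(assets, key=risk_score, reverse=True)
    urgent = [a.name for a in ranked if risk_score(a) >= threshold]
    later = [a.name for a in ranked if risk_score(a) < threshold]
    return urgent, later


# Usage with hypothetical assets.
assets = [
    Asset("hr/payroll.csv", "ssn 123-45-6789", publicly_exposed=True, backed_up=False),
    Asset("marketing/list.txt", "contact a@b.com", publicly_exposed=False, backed_up=True),
    Asset("docs/readme.md", "no sensitive data here", publicly_exposed=False, backed_up=True),
]
urgent, later = prioritize(assets)
print(urgent)  # ['hr/payroll.csv']
print(later)   # ['marketing/list.txt', 'docs/readme.md']
```

Multiplying sensitivity by exposure means a file full of sensitive data that nobody can reach still ranks below a moderately sensitive file sitting on a public share, which is the kind of "imminent versus deprioritized" distinction the paragraph describes.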

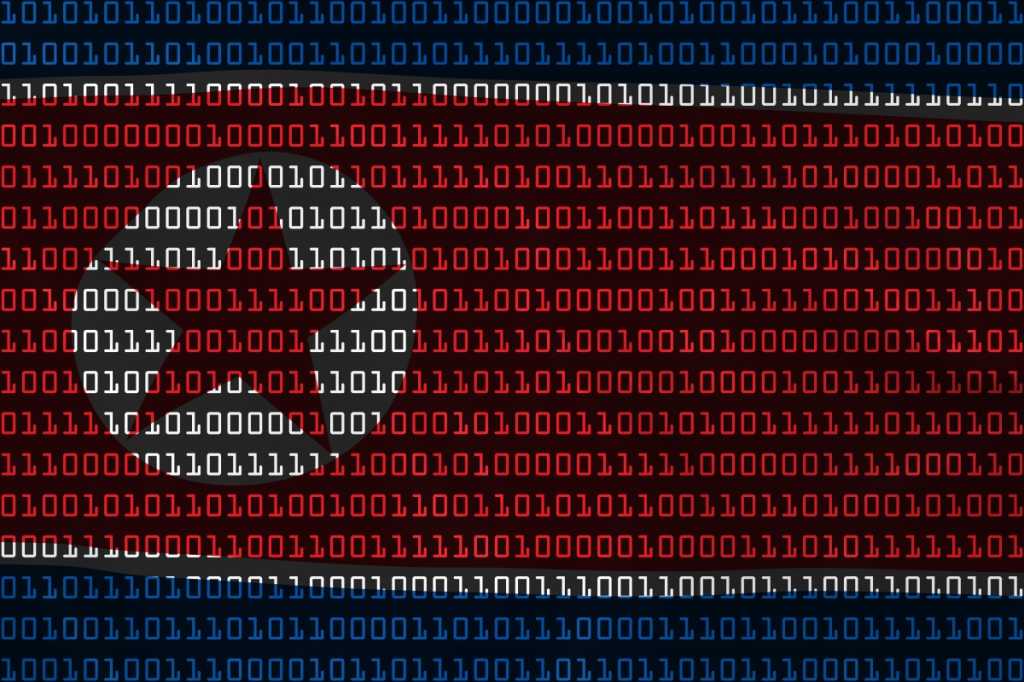

Unprecedented threats require new security standards and controls

The security community faces unprecedented threats that require coordinated action between private industry and government agencies. Recent attacks highlight severe gaps in data security standards, particularly around AI systems. AI accelerates business operations but introduces new risks. Sensitive data can leak into AI models during training and out of those models during inference; once a model is trained, governing its outputs is nondeterministic. These AI security challenges relate directly to the identity and data controls discussed above. Without adequate access management and data classification, organizations cannot prevent unauthorized data from entering AI training pipelines and then being exposed through inference.
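Because a trained model's outputs are hard to govern, the practical control point is before data reaches the model. A minimal sketch of such a gate, under stated assumptions, admits a document into a training set or retrieval context only if it has been classified, falls at or below a permitted sensitivity level, and is already accessible to the requesting identity (a training job's service account or an end user at inference time). The level names in `LEVELS`, the `allowed_groups` field, and the function names are hypothetical, not any specific platform's API.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical classification levels, ordered from least to most sensitive.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass
class Document:
    doc_id: str
    text: str
    classification: Optional[str]  # None means the document was never classified
    allowed_groups: set = field(default_factory=set)  # assumption: group-based ACL


def authorized_for_ai(doc: Document, identity_groups: set, max_level: str = "internal") -> bool:
    """Admit a document into an AI pipeline only if it is classified, at or below
    the permitted sensitivity, and readable by the requesting identity."""
    if doc.classification is None:
        return False  # unclassified data is treated as unsafe by default
    if LEVELS[doc.classification] > LEVELS[max_level]:
        return False
    return bool(doc.allowed_groups & identity_groups)


def build_context(docs, identity_groups, max_level="internal"):
    """Return IDs of the documents this identity may feed into training or inference."""
    return [d.doc_id for d in docs if authorized_for_ai(d, identity_groups, max_level)]


# Usage with hypothetical documents.
docs = [
    Document("wiki-1", "...", "public", {"all-staff"}),
    Document("plan-7", "...", "internal", {"eng"}),
    Document("mna-2", "...", "restricted", {"exec"}),
    Document("dump-9", "...", None, {"all-staff"}),
]
print(build_context(docs, {"all-staff", "eng"}))  # ['wiki-1', 'plan-7']
```

Rejecting unclassified documents by default ties the AI gate directly to the classification work: anything the scan has not yet labeled stays out of the model until it has been.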

The shifting regulatory environment adds complexity. Recent changes to cybersecurity executive orders have disrupted established collaboration frameworks between government and industry. This policy shift affects how organizations develop secure AI systems and manage vulnerabilities in our national security infrastructure. One thing is certain: the threats we face, from nation-state actors to increasingly sophisticated cybercriminal groups, won't wait for political consensus. Just as with ransomware protection, organizations must take proactive steps to secure their AI systems regardless of regulatory uncertainty.