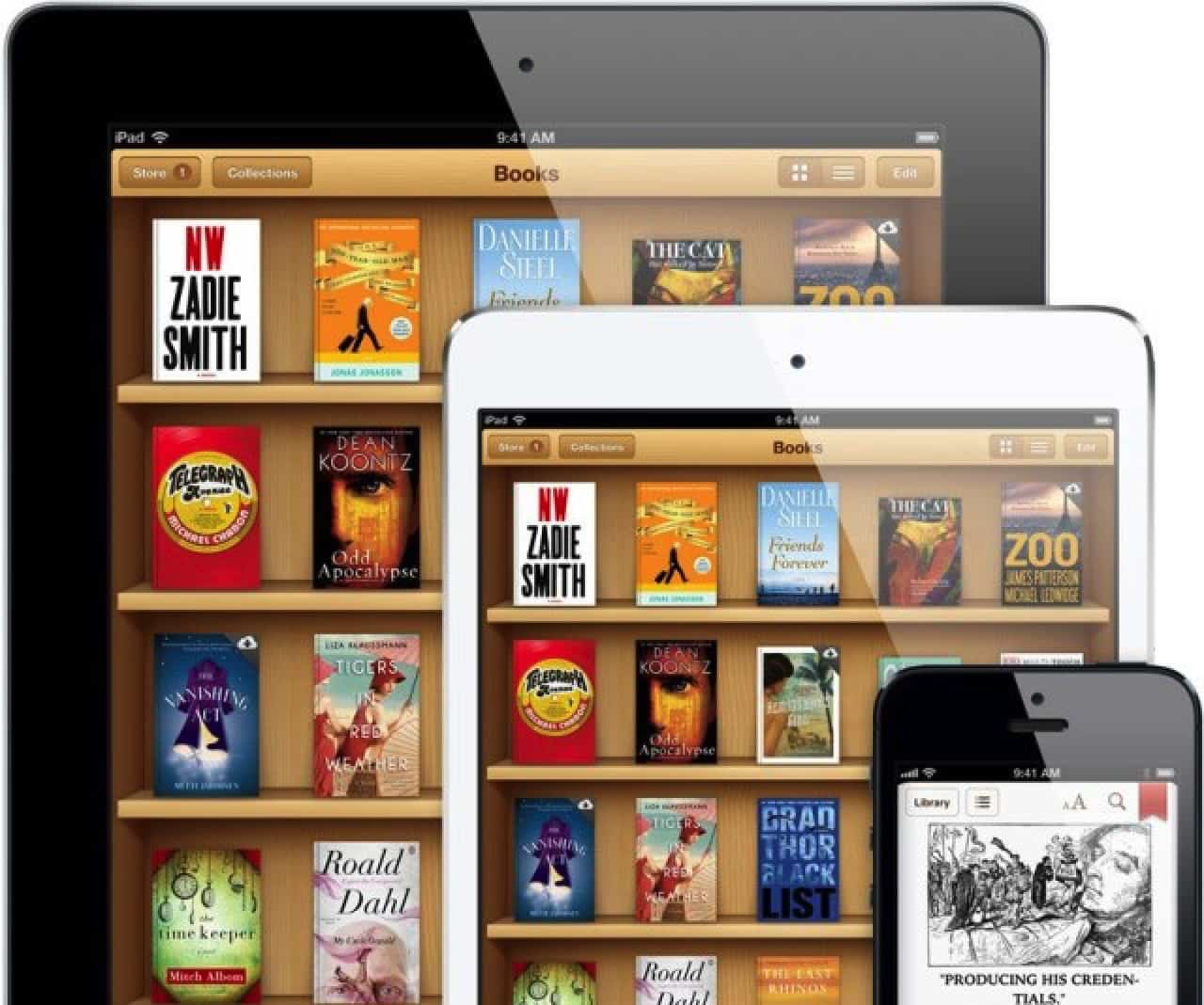

A new Apple-backed study, in collaboration with Aalto University in Finland, introduces ILuvUI: a vision-language model trained to understand mobile app interfaces from screenshots and from natural language conversations. Here’s what that means, and how they did it.

ILuvUI: an AI that outperformed the model it was based on

In the paper, ILuvUI: Instruction-tuned LangUage-Vision modeling of UIs from Machine Conversations, the team tackles a long-standing challenge in human-computer interaction, or HCI: teaching AI models to reason about user interfaces like humans do, which in practice means visually, as well as semantically.

“Understanding and automating actions on UIs is a challenging task since the UI elements in a screen, such as list items, checkboxes, and text fields, encode many layers of information beyond their affordances for interactivity alone. (….) LLMs in particular have demonstrated remarkable abilities to comprehend task instructions in natural language in many domains, however using text descriptions of UIs alone with LLMs leaves out the rich visual information of the UI.”

Currently, as the researchers explain, most vision-language models are trained on natural images, like dogs or street signs, so they don’t perform as well when asked to interpret more structured environments, like app UIs:

“Fusing visual with textual information is important to understanding UIs as it mirrors how many humans engage with the world. One approach that has sought to bridge this gap when applied to natural images are Vision-Language Models (VLMs), which accept multimodal inputs of both images and text, typically output only text, and allow for general-purpose question answering, visual reasoning, scene descriptions, and conversations with image inputs. However, the performance of these models on UI tasks fall short compared to natural images because of the lack of UI examples in their training data.”

With that in mind, the researchers fine-tuned the open-source VLM LLaVA, and they also adapted its training method to specialize in the UI domain.

They trained it on text-image pairs that were synthetically generated following a few “golden examples”. The final dataset included Q&A-style interactions, detailed screen descriptions, predicted action outcomes, and even multi-step plans (like “how to listen to the latest episode of a podcast,” or “how to change brightness settings”).

Once trained on this dataset, the resulting model, ILuvUI, was able to outperform the original LLaVA in both machine benchmarks and human preference tests.

What’s more, it doesn’t require a user to specify a region of interest in the interface. Instead, the model understands the entire screen contextually from a simple prompt:

“ILuvUI (…) doesn’t require a region of interest, and accepts a text prompt as input in addition to the UI image, which enables it to provide answers for use cases such as visual question answering.”

How will users benefit from this?

Apple’s researchers say that their approach might prove useful for accessibility, as well as for automated UI testing. They also note that while ILuvUI is still based on open components, future work could involve larger image encoders, better resolution handling, and output formats that work seamlessly with existing UI frameworks, like JSON.

And if you’ve been keeping up to date with Apple’s AI research papers, you might be thinking of a recent investigation of whether AI models could not just understand, but also anticipate the consequences of in-app actions.

Put the two together, and things start to get… interesting, especially if you rely on accessibility to navigate your devices, or just wish the OS could autonomously handle the more fiddly parts of your in-app workflows.
