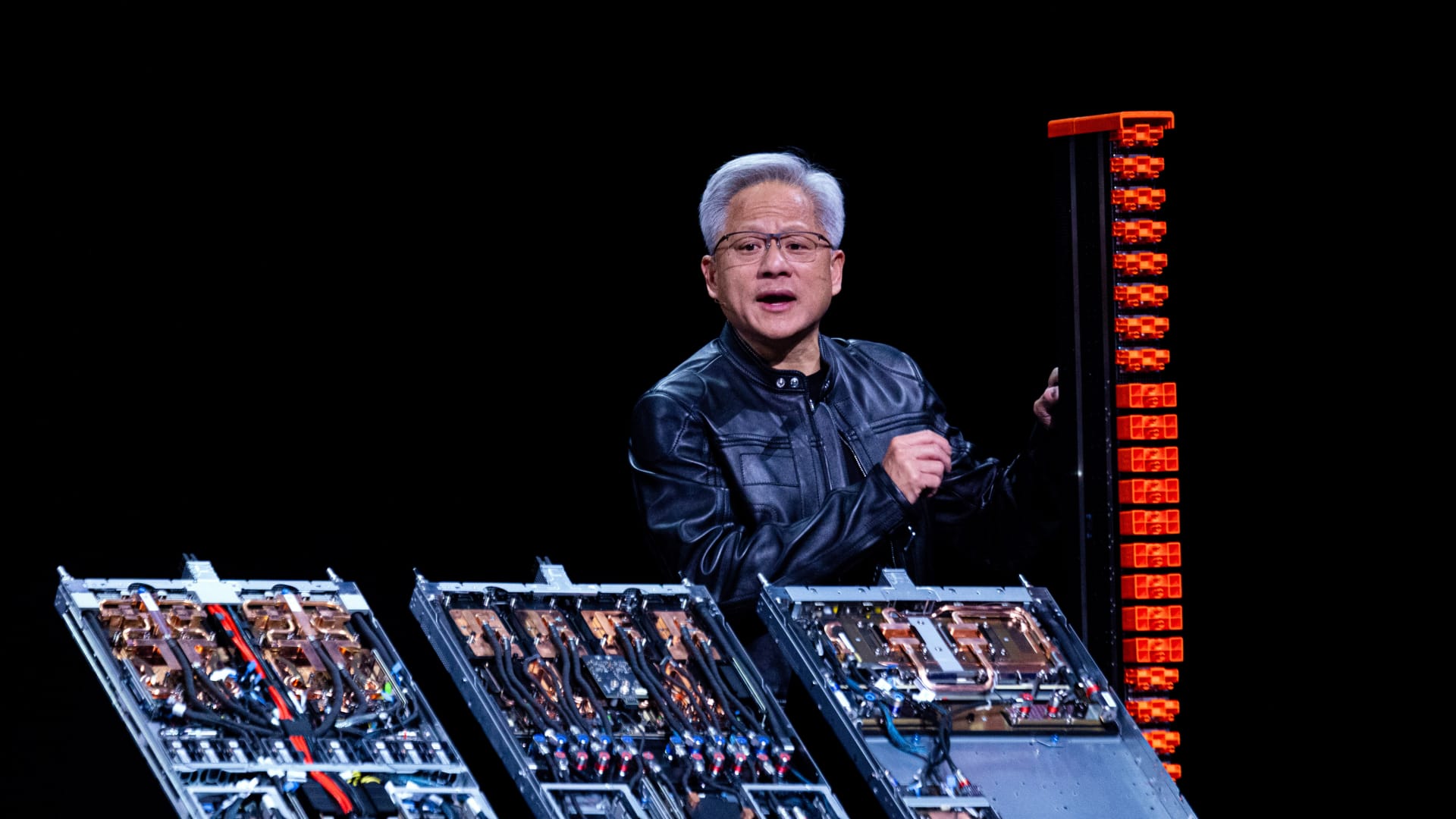

Jensen Huang, co-founder and chief executive officer of Nvidia Corp., speaks during the Computex conference in Taipei, Taiwan, on Monday, May 19, 2025.

Bloomberg | Bloomberg | Getty Images

Nvidia CEO Jensen Huang made a slew of announcements and unveiled new products on Monday that are aimed at keeping the company at the center of artificial intelligence development and computing.

One of the most notable announcements was its new “NVLink Fusion” program, which will allow customers and partners to use non-Nvidia central processing units and graphics processing units together with Nvidia’s products and its NVLink.

Until now, NVLink was limited to chips made by Nvidia. NVLink is a technology developed by Nvidia to connect and exchange data between its GPUs and CPUs.

“NV link fusion is so that you can build semi-custom AI infrastructure, not just semi-custom chips,” Huang said at Computex 2025 in Taiwan, Asia’s largest electronics conference.

According to Huang, NVLink Fusion allows AI infrastructures to combine Nvidia processors with different CPUs and application-specific integrated circuits (ASICs). “In any case, you have the benefit of using the NV link infrastructure and the NV link ecosystem.”

Nvidia announced Monday that AI chipmaking partners for NVLink Fusion already include MediaTek, Marvell, Alchip, Astera Labs, Synopsys and Cadence. Under NVLink Fusion, Nvidia customers like Fujitsu and Qualcomm Technologies will also be able to connect their own third-party CPUs with Nvidia’s GPUs in AI data centers, it added.

Ray Wang, a Washington-based semiconductor and technology analyst, told CNBC that NVLink represents Nvidia’s plans to capture a share of data centers based on ASICs, which have traditionally been seen as Nvidia competitors.

While Nvidia holds a dominant position in GPUs used for general AI training, many competitors see room for expansion in chips designed for more specific applications. Some of Nvidia’s largest competitors in AI computing, which are also some of its biggest customers, include cloud providers such as Google, Microsoft and Amazon, all of which are building their own custom processors.

NVLink Fusion “consolidates NVIDIA as the center of next-generation AI factories, even when those systems aren’t built entirely with NVIDIA chips,” Wang said, noting that it opens opportunities for Nvidia to serve customers who aren’t building fully Nvidia-based systems but are looking to integrate some of its GPUs.

“If widely adopted, NVLink Fusion could expand NVIDIA’s industry footprint by fostering deeper collaboration with custom CPU developers and ASIC designers in building the AI infrastructure of the future,” Wang said.

However, NVLink Fusion does risk lowering demand for Nvidia’s CPU by allowing Nvidia customers to use alternatives, according to Rolf Bulk, an equity research analyst at New Street Research.

Nevertheless, “at the system level, the added flexibility improves the competitiveness of Nvidia’s GPU-based solutions versus alternative emerging architectures, helping Nvidia to maintain its position at the center of AI computing,” he said.

Nvidia’s competitors Broadcom, AMD, and Intel are so far absent from the NVLink Fusion ecosystem.

Other updates

Huang opened his keynote speech with an update on Nvidia’s next generation of Grace Blackwell systems for AI workloads. The company’s “GB300,” to be released in the third quarter of this year, will offer higher overall system performance, he said.

On Monday, Nvidia also announced the new NVIDIA DGX Cloud Lepton, an AI platform with a compute marketplace that Nvidia said will connect the world’s AI developers with tens of thousands of GPUs from a global network of cloud providers.

“DGX Cloud Lepton helps address the critical challenge of securing reliable, high-performance GPU resources by unifying access to cloud AI services and GPU capacity across the NVIDIA compute ecosystem,” the company said in a press release.

In his speech, Huang also announced plans for a new office in Taiwan, where Nvidia will also be building an AI supercomputer project with Taiwan’s Foxconn, formally known as Hon Hai Technology Group, the world’s largest electronics manufacturer.

“We are delighted to partner with Foxconn and Taiwan to help build Taiwan’s AI infrastructure, and to support TSMC and other leading companies to advance innovation in the age of AI and robotics,” Huang said.