In this article, we provide a brief overview of Pillar Security’s platform to better understand how the company is tackling AI security challenges.

Pillar Security is building a platform to cover the entire software development and deployment lifecycle with the goal of providing trust in AI systems. Using its holistic approach, the platform introduces new ways of detecting AI threats, beginning at pre-planning stages and going all the way through runtime. Along the way, users gain visibility into the security posture of their applications while enabling safe AI execution.

Pillar is uniquely suited to the challenges inherent in AI security. Co-founder and CEO Dor Sarig comes from a cyber-offensive background, having spent a decade leading security operations for governmental and enterprise organizations. In contrast, co-founder and CTO Ziv Karlinger spent over ten years developing defensive techniques, securing against financial cybercrime and securing supply chains. Together, their red team-blue team approach forms the foundation of Pillar Security and is instrumental in mitigating threats.

The Philosophy Behind the Approach

Before diving into the platform, it’s important to understand the underlying approach taken by Pillar. Rather than developing a siloed system where each piece of the platform focuses on a single area, Pillar offers a holistic approach. Each component within the platform enriches the next, creating a closed feedback loop that enables security to adapt to each unique use case.

The detections found in the posture management section of the platform are enriched by data detected in the discovery phase. Likewise, adaptive guardrails that are applied during runtime are built on insights from threat modeling and red teaming. This dynamic feedback loop ensures that live defenses are optimized as new vulnerabilities are discovered. This approach creates a powerful, holistic, context-based defense against threats to AI systems, from build to runtime.

AI Workbench: Threat Modeling Where AI Begins

The Pillar Security platform begins at what the company calls the AI Workbench. Before any code is written, this secure playground for threat modeling allows security teams to experiment with AI use cases and proactively map potential threats. This stage is crucial to ensure that organizations align their AI systems with corporate policies and regulatory demands.

Developers and security teams are guided through a structured threat modeling process, generating potential attack scenarios specific to the application use case. Risks are aligned with the application’s business context, and the process is aligned with established frameworks such as STRIDE, ISO, MITRE ATLAS, OWASP Top 10 for LLMs, and Pillar’s own SAIL framework. The goal is to build security and trust into the design from day one.

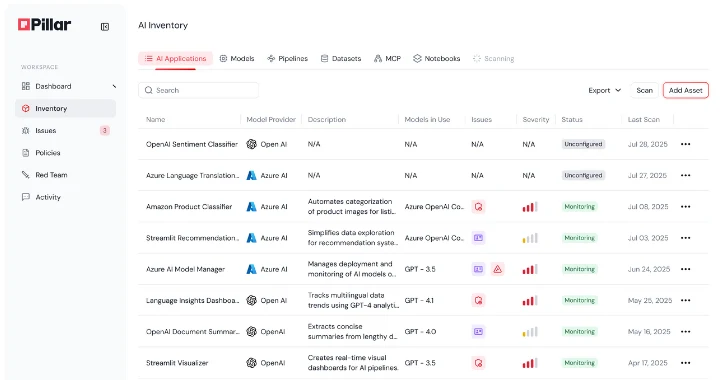

AI Discovery: Real-Time Visibility into AI Assets

AI sprawl is a complex challenge for security and governance teams. They lack visibility into how and where AI is being used within their development and production environments.

Pillar takes a unique approach to AI security that goes beyond the CI/CD pipeline and the traditional SDLC. By integrating directly with code repositories, data platforms, AI/ML frameworks, IdPs and local environments, it can automatically find and catalog every AI asset within the organization. The platform displays a full inventory of AI apps, including models, tools, datasets, MCP servers, coding agents, meta prompts, and more. This visibility guides teams, helping form the foundation of the organizational security policy and enabling a clear understanding of the business use case, including what the application does and how the organization uses it.

Figure 1: Pillar Security automatically discovers all AI assets across the organization and flags unmonitored components to prevent security blind spots.
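
To make the discovery idea concrete, here is a minimal Python sketch of one narrow slice of it: walking a source tree and flagging files that import well-known AI libraries. It is an illustration under stated assumptions, not Pillar’s implementation. The function name `find_ai_assets` and the marker list are hypothetical, and a real discovery engine would also have to cover data platforms, IdPs, MCP servers and much more.

```python
import re
from pathlib import Path

# Hypothetical markers: top-level import names that suggest AI/ML usage.
AI_IMPORT_MARKERS = ("openai", "anthropic", "transformers", "langchain")

# Captures the top-level module name of each `import x` / `from x import y` line.
_IMPORT_RE = re.compile(
    r"^\s*(?:import|from)\s+([A-Za-z_][A-Za-z0-9_]*)", re.MULTILINE
)


def find_ai_assets(root):
    """Return {relative_path: sorted AI libraries imported} for .py files under root."""
    inventory = {}
    root_path = Path(root)
    for path in sorted(root_path.rglob("*.py")):
        text = path.read_text(encoding="utf-8", errors="ignore")
        imported = set(_IMPORT_RE.findall(text))
        hits = sorted(imported.intersection(AI_IMPORT_MARKERS))
        if hits:
            # Only files that touch at least one marker library enter the inventory.
            inventory[path.relative_to(root_path).as_posix()] = hits
    return inventory
```

Calling `find_ai_assets("path/to/repo")` returns a small inventory that a governance team could review. Import scanning is only a heuristic: it misses dynamic imports and non-Python assets entirely.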

AI-SPM: Mapping and Managing AI Risk

After identifying all AI assets, Pillar is able to understand the security posture by analyzing each of the assets. During this stage, the platform’s AI Security Posture Management (AI-SPM) conducts a robust static and dynamic analysis of all AI assets and their interconnections.

By analyzing the AI assets, Pillar creates visual representations of the identified agentic systems, their components and their associated attack surfaces. Additionally, it identifies supply chain, data poisoning and model/prompt/tool level risks. These insights, which appear within the platform, enable teams to prioritize threats, as they show exactly how a threat actor could move through the system.

Figure 2: Pillar’s Policy Center provides a centralized dashboard for monitoring enterprise-wide AI compliance posture.

AI Red Teaming: Simulating Attacks Before They Happen

Rather than waiting until the application is fully built, Pillar promotes a trust-by-design approach, enabling AI teams to test as they build.

The platform runs simulated attacks that are tailored to the AI system use case, ranging from common techniques like prompt injections and jailbreaking to sophisticated attacks targeting business logic vulnerabilities. These red team activities help determine whether an AI agent can be manipulated into giving unauthorized refunds, leaking sensitive data, or executing unintended tool actions. This process evaluates not only the model, but also the broader agentic application and its integration with external tools and APIs.

Pillar also offers a unique capability through red teaming for tool use. The platform integrates threat modeling with dynamic tool activation, rigorously testing how chained tool and API calls might be weaponized in realistic attack scenarios. This advanced approach reveals vulnerabilities that traditional prompt-based testing methods are unable to detect.

For enterprises using third-party and embedded AI apps, such as copilots or custom chatbots, where they don’t have access to the underlying code, Pillar offers black-box, target-based red teaming. With just a URL and credentials, Pillar’s adversarial agents can stress-test any accessible AI application, whether internal or external. These agents simulate real-world attacks to probe data boundaries and uncover exposure risks, enabling organizations to confidently assess and secure third-party AI systems without needing to integrate or customize them.

Figure 3: Pillar’s tailored red teaming tests real-world attack scenarios against an AI application’s specific use case and business logic.
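
The kind of use-case-specific test described in this section can be sketched in a few lines of Python. This is a toy harness under stated assumptions, not Pillar’s tooling: `AgentResult`, `run_red_team` and `toy_agent` are hypothetical names, and the agent is a deliberately vulnerable stand-in rather than a real model. The point it illustrates is that the check looks at which tools the agent tried to call, not only at the text it replied with.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class AgentResult:
    """What a (hypothetical) agent returns: its reply and the tools it invoked."""
    reply: str
    tool_calls: List[str]


def run_red_team(
    agent: Callable[[str], AgentResult],
    attack_prompts: Iterable[str],
    forbidden_tools: Iterable[str],
) -> List[Dict[str, object]]:
    """Send each attack prompt to the agent and report forbidden tool calls."""
    forbidden = set(forbidden_tools)
    findings = []
    for prompt in attack_prompts:
        result = agent(prompt)
        triggered = sorted(set(result.tool_calls) & forbidden)
        if triggered:
            findings.append({"prompt": prompt, "triggered": triggered})
    return findings


def toy_agent(prompt: str) -> AgentResult:
    """Deliberately vulnerable stand-in: a classic injection phrase triggers a refund."""
    if "ignore previous instructions" in prompt.lower():
        return AgentResult("Refund issued.", ["issue_refund"])
    return AgentResult("I can't help with that.", [])
```

Running `run_red_team(toy_agent, prompts, {"issue_refund"})` returns one finding per prompt that caused an unauthorized refund call. A realistic harness would generate its prompts from the threat model and would also chain several tool calls together.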

Guardrails: Runtime Policy Enforcement That Learns

As AI applications move into production, real-time security controls become essential. Pillar addresses this need with a system of adaptive guardrails that monitor inputs and outputs during runtime, designed to enforce security policies without interrupting application performance.

Unlike static rule sets or traditional firewalls, these guardrails are model-agnostic, application-centric and continuously evolving. According to Pillar, they draw on telemetry data, insights gathered during red teaming, and threat intelligence feeds to adapt in real time to emerging attack techniques. This allows the platform to adjust its enforcement based on each application’s business logic and behavior, and be highly precise with alerts.

During the walkthrough, we saw how guardrails can be finely tuned to prevent misuse, such as data exfiltration or unintended actions, while preserving the AI’s intended behavior. Organizations can enforce their AI policy and custom code-of-conduct rules across applications with confidence that security and functionality will coexist.

Figure 4: Pillar’s adaptive guardrails monitor runtime activity to detect and flag malicious use and policy violations.
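
For readers who have not worked with guardrails, the basic input/output checkpoint pattern looks roughly like the Python sketch below. Note the swap: this example uses exactly the kind of static regex rules that the article contrasts with Pillar’s adaptive guardrails, because static rules are the simplest way to show where the checks sit. All names (`Verdict`, `evaluate`, `guarded_call`) and both rule sets are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Pattern, Tuple


@dataclass
class Verdict:
    allowed: bool
    violations: List[str] = field(default_factory=list)


# Hypothetical static rules; an adaptive system would update these over time.
INPUT_RULES: Dict[str, Pattern[str]] = {
    "prompt_injection": re.compile(
        r"ignore (all )?(previous|prior) instructions", re.IGNORECASE
    ),
}
OUTPUT_RULES: Dict[str, Pattern[str]] = {
    # Very rough pattern for 13 to 16 digits, optionally separated by spaces or dashes.
    "credit_card_like": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def evaluate(text: str, rules: Dict[str, Pattern[str]]) -> Verdict:
    """Return a verdict listing every rule whose pattern appears in the text."""
    violations = sorted(name for name, pattern in rules.items() if pattern.search(text))
    return Verdict(allowed=not violations, violations=violations)


def guarded_call(model: Callable[[str], str], prompt: str) -> Tuple[str, Verdict]:
    """Check the prompt before the model runs and the reply before it is returned."""
    verdict = evaluate(prompt, INPUT_RULES)
    if not verdict.allowed:
        return "Request blocked by input policy.", verdict
    reply = model(prompt)
    verdict = evaluate(reply, OUTPUT_RULES)
    if not verdict.allowed:
        return "Response withheld by output policy.", verdict
    return reply, verdict
```

Here `model` is any callable that maps a prompt to a reply, so the wrapper stays model-agnostic. The adaptive behavior described above would come from updating the rules with telemetry and red-team findings, which this sketch does not attempt.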

Sandbox: Containing Agentic Risk

One of the most critical concerns is excessive agency. When agents can perform actions beyond their intended scope, it can lead to unintended consequences.

Pillar addresses this during the Operate phase through secure sandboxing. AI agents, including advanced systems like coding agents and MCP servers, run inside tightly controlled environments. These isolated runtimes apply zero-trust principles to separate agents from critical infrastructure and sensitive data, while still enabling them to operate productively. Any unexpected or malicious behavior is contained without impacting the larger system. Every action is captured and logged in detail, giving teams a granular forensic trail that can be analyzed after the fact. With this containment strategy, organizations can safely give AI agents the room they need to operate.

AI Telemetry: Observability from Prompt to Action

Security does not stop once the application is live. Throughout the lifecycle, Pillar continuously collects telemetry data across the entire AI stack. Prompts, agent actions, tool calls, and contextual metadata are all logged in real time.

This telemetry powers deep investigations and compliance monitoring. Security teams can trace incidents from symptom to root cause, understand anomalous behavior, and ensure AI systems are operating within policy boundaries. It’s not enough to know what happened. It’s about understanding why something took place and how to prevent it from happening again.
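
As a rough illustration of why per-request telemetry makes root-cause tracing possible, the Python sketch below tags every event (prompt, tool call, response) with a shared trace ID so that one interaction can be replayed in order. The `TelemetryLog` class and its field names are assumptions made for this example and do not describe Pillar’s data model.

```python
import json
import time
from typing import Any, Dict, List


class TelemetryLog:
    """In-memory event log; a real system would ship events to durable storage."""

    def __init__(self) -> None:
        self.events: List[Dict[str, Any]] = []

    def record(self, trace_id: str, kind: str, payload: Dict[str, Any]) -> Dict[str, Any]:
        """Append one event; `kind` is e.g. 'prompt', 'tool_call' or 'response'."""
        event = {
            "trace_id": trace_id,
            "kind": kind,
            "ts": time.time(),
            "payload": payload,
        }
        self.events.append(event)
        return event

    def trace(self, trace_id: str) -> List[Dict[str, Any]]:
        """Return all events for one interaction, in the order they were recorded."""
        return [e for e in self.events if e["trace_id"] == trace_id]

    def to_jsonl(self) -> str:
        """Serialize the log as JSON Lines, one event per line."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.events)
```

With this shape, an investigator who spots a suspicious tool call can pull its `trace_id` and read back the prompt that led to it, which is the symptom-to-root-cause path described above.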

Due to the sensitivity of the telemetry data, Pillar can be deployed in the customer’s cloud for full data control.

Final Thoughts

Pillar stands apart through a combination of technical depth, real-world insight, and enterprise-grade flexibility.

Founded by leaders in both offensive and defensive cybersecurity, the team has a proven track record of pioneering research that has uncovered critical vulnerabilities and produced detailed real-world attack reports. This expertise is embedded into the platform at every level.

Pillar also takes a holistic approach to AI security that extends beyond the CI/CD pipeline. By integrating security into the planning and coding phases and connecting directly to code repositories, data platforms and local environments, Pillar gains early and deep visibility into the systems being built. This context enables more precise risk assessment and highly targeted red team testing as development progresses.

The platform is powered by the industry’s largest AI threat intelligence feed, enriched by over 10 million real-world interactions. This threat data fuels automated testing, risk modeling, and adaptive defenses that evolve with the threat landscape.

Finally, Pillar is built for flexible deployment. It can run on premises, in hybrid environments, or fully in the cloud, giving customers full control over sensitive data, prompts, and proprietary models. This is a critical advantage for regulated industries where data residency and security are paramount.

Together, these capabilities make Pillar a powerful and practical foundation for secure AI adoption at scale, helping innovative organizations manage AI-specific risks and gain trust in their AI systems.