A new study led by Dr. Vadim Axelrod, of the Gonda (Goldschmied) Multidisciplinary Brain Research Center at Bar-Ilan University, has revealed serious concerns about the quality of data collected on Amazon Mechanical Turk (MTurk), a platform widely used for behavioral and psychological research.

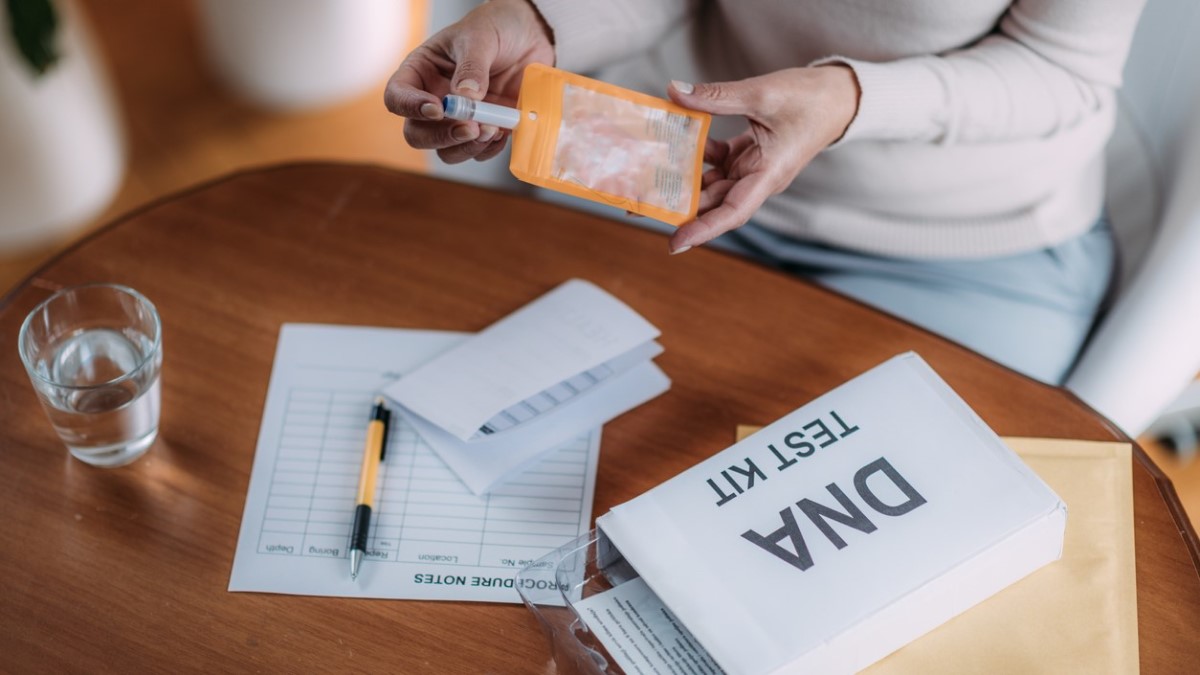

MTurk, an online crowdsourcing marketplace where individuals complete small tasks for payment, has served as a key resource for researchers for over 15 years. Despite earlier concerns about participant quality, the platform remains popular within the academic community. Dr. Axelrod’s team set out to rigorously assess the current quality of data produced by MTurk participants.

The study, involving over 1,300 participants across main and replication experiments, employed a straightforward but powerful method: repeating identical questionnaire items to measure response consistency. “If a participant is reliable, their answers to repeated questions should be consistent,” said Dr. Axelrod. In addition, the study included different types of “attentional catch” questions that should be easily answered by any attentive respondent.
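The two checks described above can be illustrated with a minimal Python sketch. It is not the authors' analysis code: the function names, the 1-to-5 answer scale, the 0.8 consistency threshold, and the catch-question keys are all hypothetical choices made for illustration.

```python
from statistics import mean


def consistency_score(first, repeat, scale_range):
    """Agreement between answers to identical items asked twice.

    Returns 1.0 for identical answers and 0.0 when every repeated
    answer sits at the opposite end of the scale.
    """
    if len(first) != len(repeat) or not first:
        raise ValueError("need two equally long, non-empty answer lists")
    diffs = [abs(a - b) / scale_range for a, b in zip(first, repeat)]
    return 1.0 - mean(diffs)


def passes_attention_checks(answers, expected):
    """True only if every catch question received its expected answer."""
    return all(answers.get(question) == answer
               for question, answer in expected.items())


def is_reliable(participant, expected_catch, scale_range=4, min_consistency=0.8):
    """Combine both checks; the 0.8 threshold is an arbitrary assumption."""
    score = consistency_score(participant["first"], participant["repeat"], scale_range)
    return passes_attention_checks(participant["catch"], expected_catch) and score >= min_consistency


# Hypothetical catch questions modeled on the examples quoted in the article.
EXPECTED_CATCH = {"marathon_runner_tired": "yes", "cancer_diagnosis_happy": "no"}

attentive = {
    "first": [1, 5, 3, 4],
    "repeat": [1, 5, 3, 4],
    "catch": {"marathon_runner_tired": "yes", "cancer_diagnosis_happy": "no"},
}
careless = {
    "first": [1, 5, 3, 4],
    "repeat": [5, 1, 3, 2],
    "catch": {"marathon_runner_tired": "no", "cancer_diagnosis_happy": "no"},
}
```

Under these assumptions, `is_reliable(attentive, EXPECTED_CATCH)` is true, while the `careless` participant fails both the catch questions and the consistency threshold.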

The findings, just published in Royal Society Open Science, were stark: the majority of participants from MTurk’s general worker pool failed the attention checks and gave highly inconsistent responses, even when the sample was restricted to users with an approval rating of 95% or higher.

“It’s hard to trust the data of someone who claims a runner isn’t tired after completing a marathon in extremely hot weather or that a cancer diagnosis would make someone happy,” Dr. Axelrod noted.

“The participants didn’t lack the knowledge to answer such attentional catch questions; they just weren’t paying sufficient attention. The implication is that their responses to the main questionnaire may be equally random.”

In contrast, Amazon’s elite “Master” workers, selected by Amazon based on high performance across previous tasks, consistently produced high-quality data. The authors recommend using Master workers for future research, bearing in mind that these participants are far more experienced and far fewer in number.

“Reliable data is the foundation of any empirical science,” said Dr. Axelrod. “Researchers need to be fully informed about the reliability of their participant pool. Our findings suggest that caution is warranted when using MTurk’s general pool for behavioral research.”

More information:

Assessing the quality and reliability of the Amazon Mechanical Turk (MTurk) data in 2024, Royal Society Open Science (2025). DOI: 10.1098/rsos.250361. royalsocietypublishing.org/doi/10.1098/rsos.250361

Citation:

Research highlights unreliable responses from most Amazon MTurk users, except for ‘master’ workers (2025, July 15)

retrieved 15 July 2025

from https://medicalxpress.com/information/2025-07-highlights-unreliable-responses-amazon-mturk.html
