As I pointed out recently, while Whisper is top of mind and still a pretty good transcription model, OpenAI has moved away from it. That said, the fact that Apple’s new transcription API is faster than Whisper is great news. But how accurate is it? We tested it out.

Full disclosure: the idea for this post came from developer Prakash Pax, who did his own tests. As he explains it:

I recorded 15 audio samples in English, randomly ranging from 15 seconds to 2 minutes. And tested against these 3 speech-to-text tools.

- Apple’s New Transcription APIs
- OpenAI Whisper Large v3 Turbo
- ElevenLabs’ Scribe v1

I won’t include his results here, otherwise you’d have no reason to visit his interesting post and check it out for yourself.

But he did add this caveat about his methodology: “I’m non-native English speaker. So the results might slightly differ for others.” His tests got me curious about how Apple and OpenAI would stack up against NVIDIA’s Parakeet, which is by far the fastest transcription model out there.

How I did it

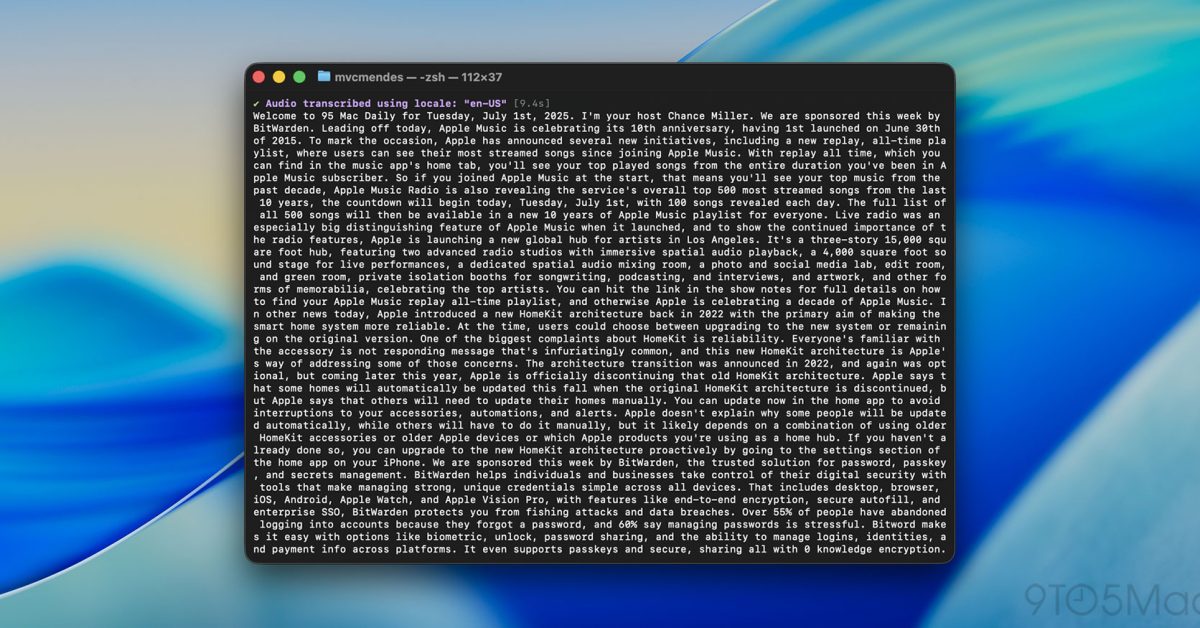

Since I’m not a native English speaker either, I decided to use a recent 9to5Mac Daily episode, which was 7:31 long.

I used MacWhisper to run OpenAI’s Whisper Large V3 Turbo and NVIDIA’s Parakeet v2. For Apple’s speech API, I used Finn Vorhees’ excellent Yap project. I ran them on my M2 Pro MacBook Pro with 16GB of RAM.

There are many ways to calculate Character Error Rate (CER) and Word Error Rate (WER): Do you normalize spacing? Do you ignore casing? Do you ignore punctuation? So for the actual analysis, I turned to these two Hugging Face Spaces: Metric: cer, and Metric: wer.

Both outline their methodology on their respective pages, so I won’t go into it here. What matters is that all models were evaluated using the same approach, which helps ensure that the baseline is consistent and the overall trends remain reliable, even if the exact numbers would differ under slightly different methods.
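For readers who want a feel for what these metrics measure, here is a minimal sketch of WER and CER built on plain Levenshtein (edit) distance. It is not the Hugging Face implementation, and the function names `edit_distance`, `wer`, and `cer` are my own for illustration. It applies no normalization at all, so casing and punctuation differences count as errors.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (word lists or strings)."""
    prev = list(range(len(hyp) + 1))  # distance from empty ref to each hyp prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # distance from ref[:i] to empty hyp
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion (item missing from hyp)
                curr[j - 1] + 1,     # insertion (extra item in hyp)
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edits divided by reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edits divided by reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)
```

With a six-word reference and one wrong word in the transcript, `wer` returns 1/6, or roughly 16.7%. The same mistake usually costs far less in CER, since only a few characters differ, which is why the CER column in results like these tends to sit well below the WER column.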

How they did

| Model | Transcription time | Character Error Rate | Word Error Rate |
| --- | --- | --- | --- |
| Parakeet v2 | 2 seconds | 5.8% | 12.3% |
| Whisper Large V3 Turbo | 40 seconds | 0.2% | 1.5% |
| Apple | 9 seconds | 1.9% | 10.3% |
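To put the transcription times in perspective, they can be expressed as a multiple of real time, using the 7:31 episode length mentioned earlier. This is just arithmetic on the reported numbers; the `speedup` helper is a name I made up for this sketch.

```python
AUDIO_SECONDS = 7 * 60 + 31  # episode length reported above (7:31 = 451 s)

# Transcription times as reported in the table
transcription_seconds = {
    "Parakeet v2": 2,
    "Whisper Large V3 Turbo": 40,
    "Apple": 9,
}


def speedup(audio_seconds, processing_seconds):
    """How many seconds of audio are transcribed per second of processing."""
    return audio_seconds / processing_seconds


for model, secs in transcription_seconds.items():
    print(f"{model}: {speedup(AUDIO_SECONDS, secs):.1f}x real time")
```

On these figures, Parakeet comes out above 200x real time, Apple around 50x, and Whisper around 11x. The times are whole seconds from a single run on one machine, so treat the ratios as rough.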

Taking things a bit further, I decided to have ChatGPT, Claude, and Gemini also calculate CER and WER. Here are the results, along with each model’s self-reported description of its methodology:

ChatGPT (o4-mini-high)

Reference (“baseline”) and each hypothesis (Apple, Parakeet, Whisper) were first normalized by:

- Converting all text to lower case
- Removing all punctuation (periods, commas, quotes, etc.)
- Collapsing any runs of whitespace to a single space

This ensures we’re comparing only the words (or characters) themselves, not formatting or capitalization.
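The three steps ChatGPT describes could look something like the sketch below. This is my own reconstruction, not the code ChatGPT ran; the function name `normalize` and the decision to treat curly quotes as punctuation are assumptions.

```python
import string

# ASCII punctuation plus curly quotes (the curly quotes are an assumption;
# the description only says "periods, commas, quotes, etc.")
PUNCTUATION = string.punctuation + "\u2018\u2019\u201c\u201d"
STRIP_PUNCTUATION = str.maketrans("", "", PUNCTUATION)


def normalize(text):
    """Lowercase, drop punctuation, collapse whitespace runs to one space."""
    text = text.lower()
    text = text.translate(STRIP_PUNCTUATION)
    # split() with no arguments splits on any whitespace run and also
    # discards leading and trailing whitespace
    return " ".join(text.split())
```

Note that removing apostrophes turns “it’s” into “its”, so two transcripts that differ only in that apostrophe would be scored as identical under this scheme.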

| Model | Transcription time | Character Error Rate | Word Error Rate |
| --- | --- | --- | --- |
| Parakeet v2 | 2 seconds | 6.0% | 12.3% |
| Whisper Large V3 Turbo | 40 seconds | 0.4% | 1.4% |
| Apple | 9 seconds | 2.1% | 10.2% |

Claude (Sonnet 4)

Text Normalization Protocol: Before computing error rates, all texts undergo standardized normalization to ensure fair comparison:

- Case normalization: Convert to lowercase
- Punctuation standardization:
  - Normalize curly quotes (‘ ’) to straight apostrophes (')
  - Normalize curly quotes (“ ”) to straight quotes (")
  - Normalize em-dashes (–) to hyphens (-)
- Whitespace normalization: Replace multiple whitespace characters with single spaces
- Trimming: Remove leading and trailing whitespace
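Unlike the ChatGPT approach, this protocol standardizes punctuation rather than removing it. A minimal sketch of what that might look like follows; it is my own reconstruction, not Claude’s actual code, and the names `CHAR_MAP` and `normalize_keep_punctuation` are hypothetical.

```python
import re

# Map typographic characters to their plain ASCII equivalents
CHAR_MAP = str.maketrans({
    "\u2018": "'",  # left single curly quote
    "\u2019": "'",  # right single curly quote / apostrophe
    "\u201c": '"',  # left double curly quote
    "\u201d": '"',  # right double curly quote
    "\u2014": "-",  # em dash
})


def normalize_keep_punctuation(text):
    """Lowercase, standardize quotes and dashes, collapse and trim whitespace."""
    text = text.lower().translate(CHAR_MAP)
    text = re.sub(r"\s+", " ", text)  # whitespace runs become a single space
    return text.strip()
```

Because periods and commas survive this normalization, a transcript that gets every word right but punctuates differently from the baseline would still pick up errors.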

Note: I tried to use Claude 4 Opus, but got error messages due to server capacity limits on Anthropic’s end.

| Model | Transcription time | Character Error Rate | Word Error Rate |
| --- | --- | --- | --- |
| Parakeet v2 | 2 seconds | 8.4% | 11.0% |
| Whisper Large V3 Turbo | 40 seconds | 0.1% | 1.0% |
| Apple | 9 seconds | 3.5% | 8.2% |

Gemini (2.5 Pro)

All texts (both the baseline and the hypothesis files) were normalized to ensure a fair comparison. The normalization process included:

- Converting all text to lowercase.
- Removing all punctuation (e.g., ,, ., ?, ‘, “).
- Replacing hyphens with spaces (e.g., “end-to-end” becomes “end to end”).
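The hyphen rule is the interesting one here, because the order of operations changes the word count. The sketch below is my own illustration of that point, not Gemini’s code; both function names are hypothetical, and I am assuming hyphens are replaced before the remaining punctuation is stripped.

```python
import string

# ASCII punctuation plus curly quotes (an assumption, as in the listed examples)
STRIP_PUNCTUATION = str.maketrans(
    "", "", string.punctuation + "\u2018\u2019\u201c\u201d"
)


def normalize_hyphen_aware(text):
    """Lowercase, turn hyphens into spaces, then remove other punctuation."""
    text = text.lower().replace("-", " ")
    return text.translate(STRIP_PUNCTUATION)


def normalize_hyphen_blind(text):
    """For contrast: stripping all punctuation first glues hyphenated words."""
    return text.lower().translate(STRIP_PUNCTUATION)


print(normalize_hyphen_aware("End-to-end, right?").split())
print(normalize_hyphen_blind("End-to-end, right?").split())
```

The first call yields four words and the second only two, so a transcript that writes “end to end” without hyphens would match the baseline under the first scheme and register word errors under the second.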

| Model | Transcription time | Character Error Rate | Word Error Rate |
| --- | --- | --- | --- |
| Parakeet v2 | 2 seconds | 7.6% | 12.3% |
| Whisper Large V3 Turbo | 40 seconds | 0.3% | 0.4% |
| Apple | 9 seconds | 3.4% | 5.3% |

So which is best?

Here’s nobody’s favorite answer: it depends.

Whisper is clearly the most accurate of the three, but if you’re working on a time-sensitive project and transcribing something a bit longer, the processing time might be a problem.

Parakeet, on the other hand, is definitely your best bet when speed matters more than precision. Say you’ve got the recording of a two-hour lecture, and just need a quick way to find a specific segment. In that case, giving up some precision for speed might be the way to go.

Apple’s model lands in the middle of the road, but not in a bad way. It’s closer to Parakeet in terms of speed, yet already manages to outperform it on accuracy. That’s pretty good for a first crack at it.

Granted, it’s still a far cry from Whisper, especially for high-stakes transcription work that requires minimal or no manual adjustments. But the fact that it runs natively, with no reliance on third-party APIs or external installs, is a big deal, especially as developer adoption ramps up and Apple continues to iterate.
