Muhammed Selim Korkutata | Anadolu | Getty Images

In the two-plus years since generative artificial intelligence took the world by storm following the public release of ChatGPT, trust has been a perpetual problem.

Hallucinations, bad math and cultural biases have plagued results, reminding users that there’s a limit to how much we can rely on AI, at least for now.

Elon Musk’s Grok chatbot, created by his startup xAI, showed this week that there’s a deeper reason for concern: The AI can be easily manipulated by humans.

Grok on Wednesday began responding to user queries with false claims of “white genocide” in South Africa. By late in the day, screenshots were posted across X of similar answers even when the questions had nothing to do with the topic.

After remaining silent on the matter for well over 24 hours, xAI said late Thursday that Grok’s strange behavior was caused by an “unauthorized modification” to the chat app’s so-called system prompts, which help inform the way it behaves and interacts with users. In other words, humans were dictating the AI’s response.

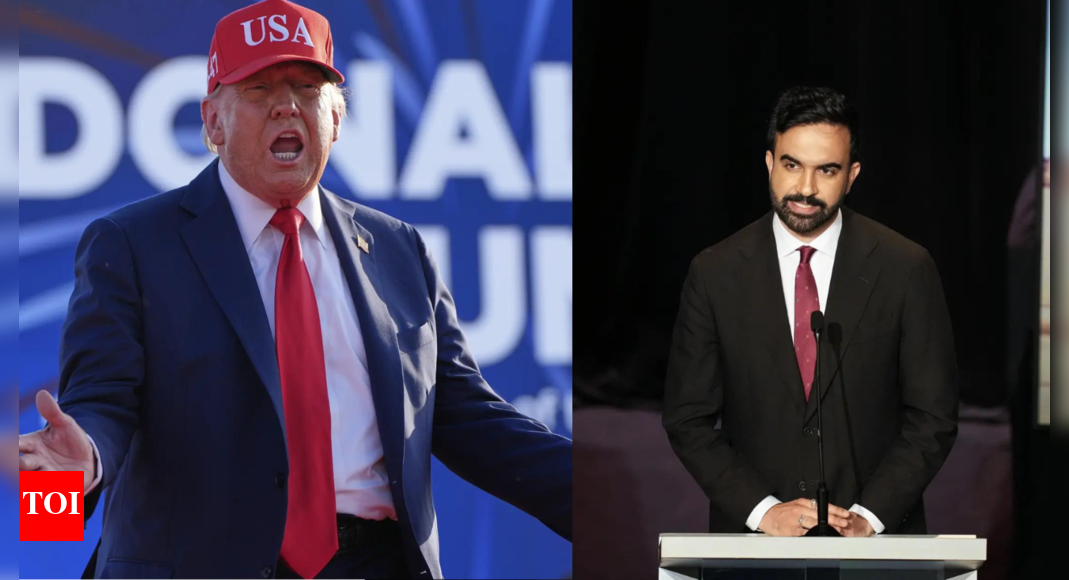

The nature of the response, in this case, ties directly to Musk, who was born and raised in South Africa. Musk, who owns xAI in addition to his CEO roles at Tesla and SpaceX, has been promoting the false claim that violence against some South African farmers constitutes “white genocide,” a sentiment that President Donald Trump has also expressed.

“I think it’s incredibly important because of the content and who leads this company, and the ways in which it suggests or sheds light on kind of the power that these tools have to shape people’s thinking and understanding of the world,” said Deirdre Mulligan, a professor at the University of California at Berkeley and an expert in AI governance.

Mulligan characterized the Grok miscue as an “algorithmic breakdown” that “rips apart at the seams” the supposed neutral nature of large language models. She said there’s no reason to see Grok’s malfunction as merely an “exception.”

AI-powered chatbots created by Meta, Google and OpenAI aren’t “packaging up” information in a neutral way, but are instead passing data through a “set of filters and values that are built into the system,” Mulligan said. Grok’s breakdown offers a window into how easily any of these systems can be altered to meet an individual’s or group’s agenda.

Representatives from xAI, Google and OpenAI didn’t respond to requests for comment. Meta declined to comment.

Different from past problems

Grok’s unsanctioned alteration, xAI said in its statement, violated “internal policies and core values.” The company said it would take steps to prevent similar disasters and would publish the app’s system prompts in order to “strengthen your trust in Grok as a truth-seeking AI.”

It’s not the first AI blunder to go viral online. A decade ago, Google’s Photos app mislabeled African Americans as gorillas. Last year, Google temporarily paused its Gemini AI image generation feature after admitting it was offering “inaccuracies” in historical pictures. And OpenAI’s DALL-E image generator was accused by some users of showing signs of bias in 2022, leading the company to announce that it was implementing a new technique so images “accurately reflect the diversity of the world’s population.”

In 2023, 58% of AI decision-makers at companies in Australia, the U.K. and the U.S. expressed concern over the risk of hallucinations in a generative AI deployment, Forrester found. The survey, conducted in September of that year, included 258 respondents.

Experts told CNBC that the Grok incident is reminiscent of China’s DeepSeek, which became an overnight sensation in the U.S. earlier this year due to the quality of its new model and reports that it was built at a fraction of the cost of its U.S. rivals.

Critics have said that DeepSeek censors topics deemed sensitive to the Chinese government. Like China with DeepSeek, Musk appears to be influencing results based on his political views, they say.

When xAI debuted Grok in November 2023, Musk said it was meant to have “a bit of wit,” “a rebellious streak” and to answer the “spicy questions” that competitors might dodge. In February, xAI blamed an engineer for changes that suppressed Grok responses to user questions about misinformation, keeping Musk’s and Trump’s names out of replies.

But Grok’s recent obsession with “white genocide” in South Africa is more extreme.

Petar Tsankov, CEO of AI model auditing firm LatticeFlow AI, said Grok’s blowup is more surprising than what we saw with DeepSeek because one would “kind of expect that there would be some kind of manipulation from China.”

Tsankov, whose company is based in Switzerland, said the industry needs more transparency so users can better understand how companies build and train their models and how that influences behavior. He noted efforts by the EU to require more tech companies to provide transparency as part of broader regulations in the region.

Without a public outcry, “we will never get to deploy safer models,” Tsankov said, and it will be “people who will be paying the price” for putting their trust in the companies developing them.

Mike Gualtieri, an analyst at Forrester, said the Grok debacle isn’t likely to slow user growth for chatbots or diminish the investments that companies are pouring into the technology. He said users have a certain level of acceptance for these sorts of occurrences.

“Whether it’s Grok, ChatGPT or Gemini, everyone expects it now,” Gualtieri said. “They’ve been told how the models hallucinate. There’s an expectation this will happen.”

Olivia Gambelin, AI ethicist and author of the book Responsible AI, published last year, said that while this type of activity from Grok is not surprising, it underscores a fundamental flaw in AI models.

Gambelin said it “shows it’s possible, at least with Grok models, to adjust these general-purpose foundational models at will.”

— CNBC’s Lora Kolodny and Salvador Rodriguez contributed to this report
