The cybersecurity landscape has been dramatically reshaped by the advent of generative AI. Attackers now use large language models (LLMs) to impersonate trusted individuals and to automate social engineering tactics at scale.

Let's review the state of these emerging attacks, what's fueling them, and how to actually prevent them rather than merely detect them.

The Most Powerful Person on the Call Might Not Be Real

Recent threat intelligence reports highlight the growing sophistication and prevalence of AI-driven attacks.

In this new era, trust can't be assumed or merely detected. It must be proven deterministically and in real time.

Why the Problem Is Growing

Three trends are converging to make AI impersonation the next major threat vector:

- AI makes deception cheap and scalable: With open-source voice and video tools, threat actors can impersonate anyone with just a few minutes of reference material.

- Digital collaboration exposes trust gaps: Tools like Zoom, Teams, and Slack assume the person behind a screen is who they claim to be. Attackers exploit that assumption.

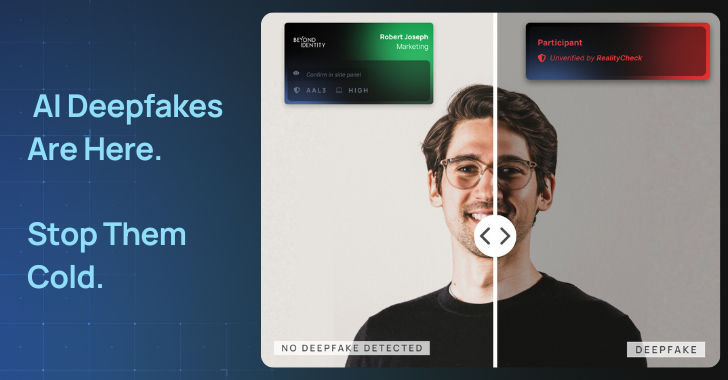

- Defenses often rely on probability, not proof: Deepfake detection tools use facial markers and analytics to guess whether someone is real. That isn't good enough in a high-stakes environment.

And while endpoint tools and user training may help, they aren't built to answer a critical question in real time: Can I trust the person I'm talking to?

AI Detection Technologies Are Not Enough

Traditional defenses focus on detection, such as training users to spot suspicious behavior or using AI to analyze whether someone is fake. But deepfakes are getting too good, too fast. You can't fight AI-generated deception with probability-based tools.

Real prevention requires a different foundation, one based on provable trust rather than assumption. That means:

- Identity Verification: Only verified, authorized users should be able to join sensitive meetings or chats, based on cryptographic credentials rather than passwords or codes.

- Device Integrity Checks: If a user's device is infected, jailbroken, or non-compliant, it becomes a potential entry point for attackers, even if the user's identity is verified. Block such devices from meetings until they're remediated.

- Visible Trust Indicators: Other participants need to see proof that each person in the meeting is who they say they are and is using a secure device. This removes the burden of judgment from end users.
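The three requirements above can be combined into a single admission check that runs before anyone enters a meeting. The sketch below is a simplified illustration under stated assumptions, not how RealityCheck or any specific product works: it assumes a hypothetical meeting service with an in-memory device registry, and it uses an HMAC challenge-response over a per-device shared secret (Python standard library only) as a stand-in for the public-key, hardware-bound credentials (such as FIDO2/WebAuthn passkeys) a production system would use. All names (`Device`, `enroll`, `issue_challenge`, `sign_challenge`, `admit`) and the posture flags are hypothetical.

```python
# Simplified sketch: HMAC over a shared per-device secret stands in for the
# public-key signatures a real deployment would use. All names are hypothetical.
import hashlib
import hmac
import secrets
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical record the meeting service keeps for each enrolled device."""
    device_id: str
    key: bytes        # per-device secret from enrollment (stand-in for a hardware-bound key)
    compliant: bool   # hypothetical posture flag, e.g. patched OS and disk encryption
    jailbroken: bool  # hypothetical result of a device integrity check


REGISTRY: dict[str, tuple[str, Device]] = {}  # device_id -> (user, device)
PENDING: set[bytes] = set()                   # challenges issued but not yet answered


def enroll(user: str, device: Device) -> None:
    REGISTRY[device.device_id] = (user, device)


def issue_challenge() -> bytes:
    # Fresh random nonce for each join attempt
    challenge = secrets.token_bytes(32)
    PENDING.add(challenge)
    return challenge


def sign_challenge(device: Device, challenge: bytes) -> bytes:
    # Runs on the participant's device; no password or meeting code is involved
    return hmac.new(device.key, challenge, hashlib.sha256).digest()


def admit(device_id: str, challenge: bytes, response: bytes,
          authorized_users: set[str]) -> tuple[bool, str]:
    """Decide whether a join request gets in, and what badge others see."""
    # Single-use challenges: a replayed (challenge, response) pair is rejected
    if challenge not in PENDING:
        return False, "stale or unknown challenge"
    PENDING.discard(challenge)

    entry = REGISTRY.get(device_id)
    if entry is None:
        return False, "unknown device"
    user, device = entry

    # 1. Identity: the response must prove possession of the enrolled key
    expected = hmac.new(device.key, challenge, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, response):
        return False, "failed cryptographic check"
    if user not in authorized_users:
        return False, "user not authorized for this meeting"

    # 2. Device integrity: a verified user on a risky device is still blocked
    if device.jailbroken or not device.compliant:
        return False, "device needs remediation"

    # 3. Visible trust indicator shown to the other participants
    return True, f"verified: {user}"


alice_laptop = Device("laptop-01", secrets.token_bytes(32), compliant=True, jailbroken=False)
enroll("alice", alice_laptop)
c = issue_challenge()
print(admit("laptop-01", c, sign_challenge(alice_laptop, c), {"alice"}))  # (True, 'verified: alice')
print(admit("laptop-01", c, sign_challenge(alice_laptop, c), {"alice"}))  # (False, 'stale or unknown challenge')
```

In this design the decision never depends on anyone judging a face or a voice: admission follows only from possession of an enrolled key, membership in the meeting's authorized set, and device posture, and the badge string is produced only after every check has passed.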

Prevention means creating conditions where impersonation isn't just hard, it's impossible. That's how you stop AI deepfake attacks before the attackers can join high-risk conversations such as board meetings, financial transactions, or vendor collaborations.

| Detection-Based Approach | Prevention Approach |
|---|---|
| Flag anomalies after they occur | Block unauthorized users from ever joining |
| Rely on heuristics & guesswork | Use cryptographic proof of identity |
| Require user judgment | Provide visible, verified trust indicators |

Eliminate Deepfake Threats From Your Calls

RealityCheck by Beyond Identity was built to close this trust gap inside collaboration tools. It gives every participant a visible, verified identity badge backed by cryptographic device authentication and continuous risk checks.

Currently available for Zoom and Microsoft Teams (video and chat), RealityCheck:

- Confirms that every participant's identity is real and authorized

- Validates device compliance in real time, even on unmanaged devices

- Displays a visual badge to show others you've been verified

If you want to see how it works, Beyond Identity is hosting a webinar where you can see the product in action. Register here!